I figured I'd do this project to respond to all those people who were skeptical that the Open SciCal had any utility in the modern world. Well... my answer is yes.

I figured I'd do this project to respond to all those people who were skeptical that the Open SciCal had any utility in the modern world. Well... my answer is yes.

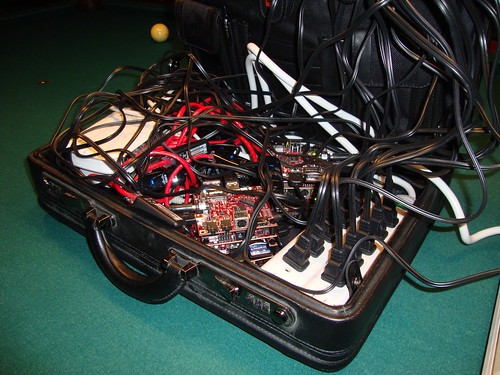

I took 10 BeagleBoards and turned a suitcase into a wifi-accessible, on-demand, elastic R computer cluster that runs at 10 Ghz, 40 Gigs of disk, and 1,000 megabits of networking bandwidth. I did this all for less than $2,000, and spent less than 5 hours building it, and now I have a scalable compute resource that is portable, has performance comparable to mid-range and high end servers that cost $15,000-30,000 from IBM or Sun. And better yet, it all runs at 30 Watts. That's less than most of the incandescent light bulbs in my room right now.

I've also made the whole project Open Source, for anyone who wants to replicate it on their own. Personally, I'm going to build another one out of 32 of these suckers, and rent it to the trading firm that bought dozens of Open SciCal's from me in the past couple weeks.

Step 1: Get 10 BeagleBoards

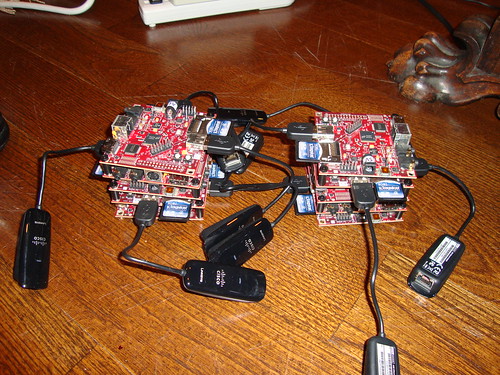

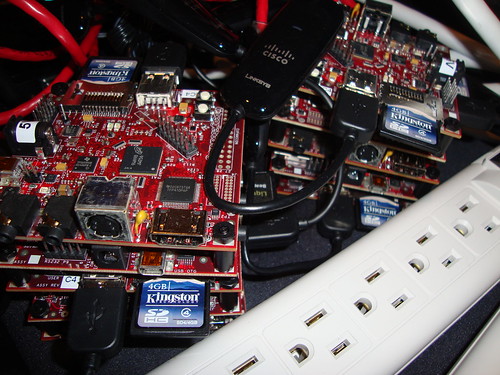

I took 10 BeagleBoards, and used the same standoffs that I use in the Embedded Gadget Pack. I used an offset pattern so they could stack as high as I wanted them to. I built them all the way up to 10, and then decided it wouldn't fit in a suitcase, so I split them up into 2 mini-towers of 5 BeagleBoards each.

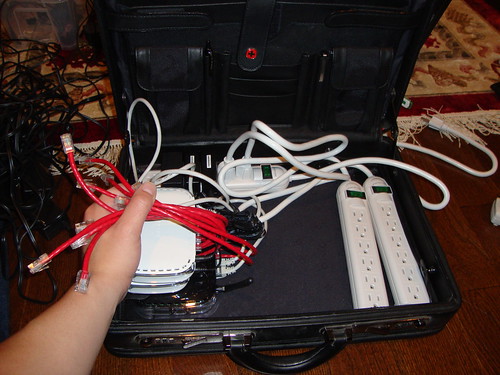

Step 2: Buy 3 cheap, low power hubs

I went to Radio Shack (begrudgingly because they sold out to the cell phone man in 2000 and have sucked ever since), since they were the only ones open. I bought 1 wireless router with 5 ethernet ports in the back, and then bought 2 netgear hubs (each had 5 ethernet ports).

Step 3: Wire the "interconnection backplane"

I've been reading old literature about multicore, multi-processor, scalable computer architectures, and they have a funky way of making simple concepts like "network" sound really complex like "Fat Tree Fishbone N-Way Scalable Interconnection Backplane".

Oh so you mean I plugged the two hubs into the wireless router, and then put 4 wires into each of the hubs, and 2 from the router, and connected them to the BeagleBoards with USB-to-Ethernet modules.

Like this:

I have this new mantra that things I read in hefty academic papers are written in language way too fancy for what they're trying to do. I get the sense that this is intentional, to hide the fact that what they researched could be replicated for $2,000 and 5 hours in modern times. I kid, partially. I'm reading papers written in the 1980's and 1990's, when the idea of doing what I just did would have taken a $10,000,000 DARPA grant.

Step 4: Set up the Open SciCal Slave Nodes

Each of the BeagleBoards is running the Open SciCal SD card from Liquidware, with a few notable additions:

-Each card is configured to allow passwordless logins over dropbear SSH

-Each card auto-configures itself to a static IP address

-The cards have a slightly trimmed down set of background apps to make R more responsive

I then replicated the card 10 times, onto each of my SD cards.

Step 5: Build the Power Backbone

This was an easy step conceptually, but a pain to do in an organized manner. So I gave up the organization, and just hacked through it. I took 10 BeagleBoard power connectors from the Liquidware shop (specifically those because they're low wattage). And connected them into two small outlet strips.

I then routed those power cables around and through to each of the 10 Open SciCal BeagleBoard Compute Nodes.

For kicks, I got one of those "kill-a-watt" meters and put it in front of the whole set up, so I could measure how much power the whole thing consumed.

Step 6: Configure the Master

Parallel architecture guys are always labeling nodes "master" and "slave". I think it's some hidden repressed anger at the fact that most of us "nerds" never got picked first for kick-ball and 4-square (the game, not the GPS website) when we were kids. Take that, 2nd grade. Now look who's deciding who's "master" and "slave". Me.

In all seriousness, at this point in the project, I had 10 networked Open SciCal nodes. But no way to issue code to them. So I took out my trusty Ubuntu Linux laptop, and quickly got it to connect to the wireless G router I'd set up to be the master switch for all 10 of the BeagleBoards.

Since the system is running on R, I naturally tried to get some of their default environments running, like Snow, Snowfall, svSockets, and even MPI. But each of those turned out to be serious overkill. Sure, they're easy to use if someone else is installing them for you and you don't have to think about it, but they didn't really get the job done in the amount of time I was willing to dedicate, so I wrote my own scripts.

Here are all of the programs I wrote, in one "app" on the "Open Source App Store".

Step 7: Write Elastic R Programs

I was hired by a company a couple months ago to write data mining algorithms to run on the Open SciCal. Most of them were top secret, but a few were pretty elementary. For instance, one function that is used often in text data mining is a function to extract all capitalized phrases. For instance, Amazon uses this on their website to summarize books with a few phrases.

Here's the R code that extracts the indexed location of any capitalized word in a piece of text:

x=readLines(file("data.txt"));

y=(unlist(lapply(x,function(x){lapply(x,function(x){strsplit(x," ")})})));

z=y[y!=""];

out=which(lapply(z,function(x){grep("[A-Z]",unlist(strsplit(x[1],""))[1],value=FALSE,invert=FALSE)})==1);

write.csv(out,file="out.txt")

This is a piece of code that you'd often want to run against 1,000's of pieces of text at a time, to extract important pieces of information. I wrote this into a program called "upper.r".

I then wrote some "administrative" functions for my homemade Elastic R Beowulf cluster - you can download them here:

"esh" - "elastic shell" - this runs a command on all of the slave nodes, and kicks back the output

For instance: esh "uname -a"

"ecp" - "elastic copy" - this copies a file to the home/root/ directory of each of the slave nodes

"epush" - "elastic data replicate push" - this takes a set of data files in the data/ folder called "1.txt" "2.txt" "3.txt" etc. all the way up to "10.txt" and copies them over to each node as /home/root/data.txt. This is important if you want to parallelize different data across to each of the nodes.

"epull" - "elastic data extract pull" - this does the inverse of "epush" in that it pulls a single file called /home/root/out.txt off each of the nodes, and renames them locally into the "out/" folder as "1.txt" "2.txt" "3.txt" etc. according to which node it came off

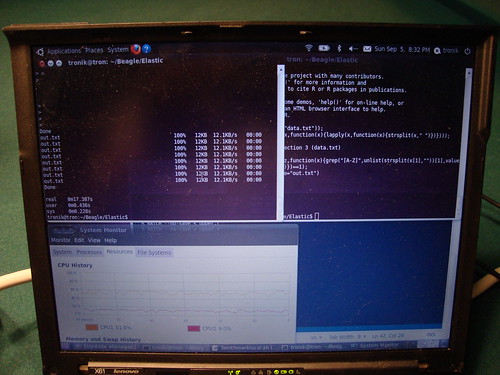

A typical session would look something like this:

esh "uname -a"

ecp upper.r

epush data.txt

esh "R BATCH < href="http://en.wikipedia.org/wiki/MapReduce">Map/Reduce". If you wrote about parallel computing in the 1980's, you would call it "Distribute/Evaluate/Collect". If you worked at Wolfram Media, you'd call it "ParallelDistribute/ParallelMap". Or if you hacked on the Cray, you'd call it "LoadVec/ParVec/PopVec". At NVIDIA you'd probably call this "CUDALoad/CUDAExecute". Or if you were the CEO of Amazon, I supposed you'd call it "Elastic Cloud Map Reduce" and then you'd make the programming API really obscure and difficult to develop for, and then charge a god-given arm and a leg to use it.

They all do the same thing: copy data to nodes, run them, and copy the output back.

Step 8: Benchmark and Go!

I wrote a couple of benchmark programs that basically run the upper.r code 40 times on a large chunk of text I downloaded from Project Gutenberg. The benchmarklocal.sh script runs the test 40 times serially locally on my laptop.

The benchmarkparallel.sh script runs the test 10 times in parallel, then repeats 4 times.

#!/bin/bash

./ecp data.txt

./ecp upper.r

./esh "R BATCH --no-save <>

The results are surprising to me, at least. The punchline is that, on average, the Dual-Core Intel chip takes ~30-35 seconds to complete the tests, while the BeagleBoard Elastic R Beowulf Cluster takes around ~20 seconds.

benchmarkparallel.sh is faster than my top-of-the-line $4,500 Lenovo work laptop running benchmarklocal.sh. Now there are always going to be skeptics saying, I could have optimized this or that, but that's not the point. I built it much faster than I could have normally...

Step 9: Take lots of photos

The sky's the limit. Or rather, the practical limit is my ability to appear socially uninhibited as I bring this suitcase into a conference meeting room, pardon myself as I plug it into the outlet (until I get a battery backup unit), and then run the thing at max speed as I calculate floating point math, and extract long capitalized phrases from anything in the room.

I uploaded the rest of the pictures I took in much higher resolution over on Flickr...

:-)

Enjoy...

10 comments:

Nice setup, and writeup! I might be using a very similar cluster in a robot soon.

Looks like you could use a decent 5V power supply and distribute DC instead. Oh, and I know how much you wish the original BeagleBoards had ethernet ports.

@Micah - well, feel free to use any of the code I wrote, and let me know if you need any help. yeah. ethernet on those boards would have saved an extra step in the setup for sure...

Nice job on the hardware.

How much are these guys paying you to code? Your example code could stand to go on a diet ;)

x = readLines("data.txt")

y = unlist(strsplit(x,split=" "))

z = y[y!=""]

out = grep("^[A-Z]",z)

write.csv(out,file="out.txt")

In R you don't need to end lines with ';' and you don't need curly braces in your one-line function definitions.

But otherwise good job on bringing R into new applications and new hardware!

You rule!

Way cool, and way different!

Just out of curiousity, why don't you use the newer Beagleboards for your next cluster?

Have you looked at the Gumstix Overo boards? They have a board they call a Stagecoach that networks up to 7 overo board to one Ethernet port.

If you use two stagecoach boards with 13 of the base overo and one overo with wifi, you just need 2 power packs and the whole thing would do the same as all those beagle boards and take a lot less space.

@Rando - thanks a lot :-)

@vaibhav - actually, the newer beagleboards have a few issues at the moment with their os stability... so although they're technically faster, I'm still hacking through getting them stable... maybe in a few months I'll have that fixed!

@dan - you know, actually i looked really hard at the gumstix boards, but they just don't do it for me. there's something missing, and the expansion ports on the beagleboard are what i really like, especially for what i'm planning to build next (dun dun dun). the gumstix have been around a really long time, but the beagleboard platform has a much wider development community in general...

Post a Comment