I got bored with my Kindle after about 20 times using it, and started wanting to hack it. I have a modern form of A.D.D. that makes me want to break open cases and solder memory expansion ports onto any device I touch. There's so much I'd do differently, yet you can't really do any development on it - I don't understand - they should just open it up, and let people like me hack away at it.

Since Amazon didn't, and doesn't, I decided to turn the Open SciCal into a hackable ebook reader, based on the FBReader source code. This means that it's hackable from the ground up...

It's not bad, but there are some things I'd change. Luckily I can, because the source is open and accessible... in fact, it's quite easy to understand - here's the important main function set, from FBReader, which gives a good idea of how approachable the code is (if you know C and C++, that is):

void FBReader::openBookInternal(shared_ptr

if (!book.isNull()) {

BookTextView &bookTextView = (BookTextView&)*myBookTextView;

ContentsView &contentsView = (ContentsView&)*myContentsView;

FootnoteView &footnoteView = (FootnoteView&)*myFootnoteView;

bookTextView.saveState();

bookTextView.setModel(0, 0);

bookTextView.setContentsModel(0);

contentsView.setModel(0);

myModel.reset();

myModel = new BookModel(book);

ZLTextHyphenator::Instance().load(book->language());

bookTextView.setModel(myModel->bookTextModel(), book);

bookTextView.setCaption(book->title());

bookTextView.setContentsModel(myModel->contentsModel());

footnoteView.setModel(0);

footnoteView.setCaption(book->title());

contentsView.setModel(myModel->contentsModel());

contentsView.setCaption(book->title());

Library::Instance().addBook(book);

Library::Instance().addBookToRecentList(book);

((RecentBooksPopupData&)*myRecentBooksPopupData).updateId();

showBookTextView();

}

}

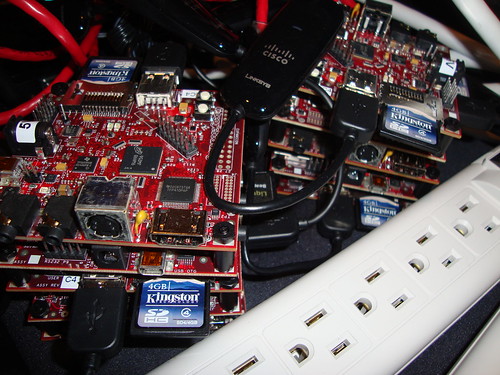

The Open Source Ebook reader is based on a handful of modules:

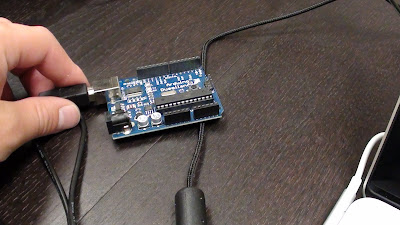

-BeagleBoard - the guts of my Ebook uses the TI OMAP

-BeagleTouch - it has a touchscreen OLED screen

-BeagleJuice - it's powered for 8 hours at a time with lithium ions

-FBReader - open source software, quite nice and hackable

-Liquidware Ebook Boot - an open source boot SD card with a tweaked version of Angstrom to integrate all the parts

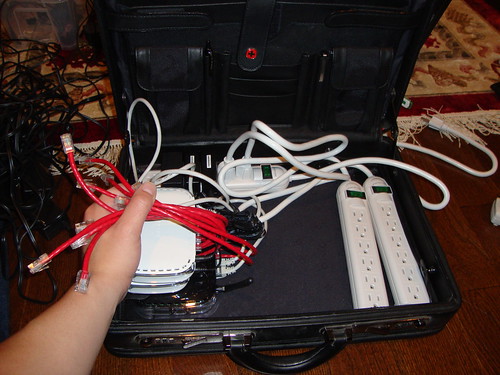

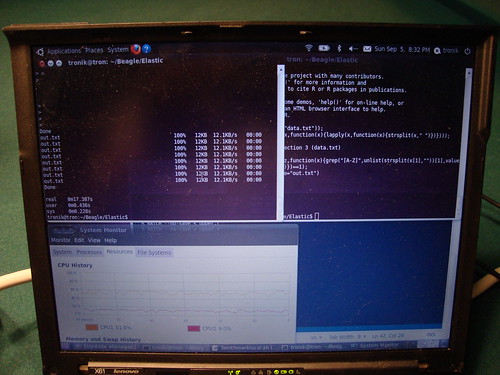

The base parts are pretty straight-forward, they just snap together and go. If you're a hacker, you could build it all together pretty straightforward-like from source and use Angstrom. But if you're lazy, you can just buy the "Ebook Boot" SD card which took Chris and Will and me about 2 weeks of hacking around to build, from source, from scratch. It includes all of the drivers needed to get the touchscreen working within Linux, and a handful of libraries and scripts to make wifi, power management, screen control, etc. work right out of the box...

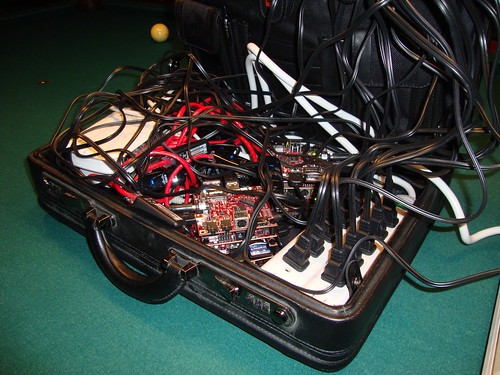

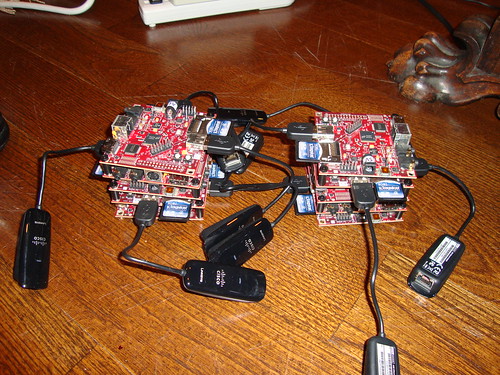

The fully combined stack of modules makes an Ebook:

Here it is from another angle, against a go table that I built by hand (those lines took forever, and were done with a heat iron, for wood carving, but it was so worth it):

This photo is probably the most "ironic" given it's a shot perhaps the most purpose-built hackable Ebook reader on top of the perhaps the most purpose-built non-hackable devices ever: a Mac laptop.

This is probably my favorite picture:

I haven't tried it yet, but conceivably, it could be connected to the net using the Wifi module, and then it could download Ebooks and free Ebooks from the various online stores that FBReader lets you connect to...

I've uploaded some pictures over on the flickr page, and the modules and finished EbookBoot SD Card are all available over at the Liquidware shop... I think I should run a timed contest against myself on how many different types of gadgets I can build within 4 minutes, like these guys that Make blog featured a little while ago...

:-)